My First Agent - Woz

Notes from vibe coding my first agent

Table of contents

- Goals

- Pre-requisites

- Problem

- Why it matters

- Story Evaluation

- Solution Approach

- Implementation

- Possible Enhancements

- Sample Results

Goals of This Exercise

This project was my first attempt at building an agent. The primary goals were:

- Learn to work with basic “vibe” coding – focus on getting something functional rather than over-engineered.

- Build the most minimal agent possible:

  - Command-Line Interface (CLI) based

  - No fancy user interface (UI) or visual components

  - Keep dependencies minimal

- Integrate with Jira Cloud to read and update issues via API.

- Work within a strict time frame – complete the entire process, including this write-up, in 4–5 hours.

- Focus on learning over perfection – prioritize understanding the workflow of creating and testing an agent over building a production-ready tool.

Prerequisites

Before starting, complete the following setup steps:

1. Get an OpenAI API Key

- Sign up for an OpenAI account: https://platform.openai.com/.

- Choose the pay-as-you-go plan.

- Preload $5 credit to cover API usage for this experiment.

- Go to View API Keys in your OpenAI dashboard.

- Click Create New Secret Key, copy it, and store it securely — you’ll need it in your code.

2. Create a Free Jira Cloud Account

- Sign up at: https://www.atlassian.com/software/jira/free.

- Choose Jira Software (Scrum) during setup.

- Create a new project, for example `WozProject`.

- Create at least one story/issue.

  - Example: Story Name: `Connectivity_Story`, Issue ID: `SCRUM-1`

3. Generate a Jira API Token

- Go to: https://id.atlassian.com/manage/api-tokens.

- Click Create API Token.

- Copy the token and store it securely — you’ll use it with your Jira email for API requests.

4. Install the jq JSON Processor

- Check if `jq` is already installed by running:

```bash
jq --version
```

- If it is not installed, install it with:

```bash
winget install jqlang.jq
```

5. Install Visual Studio Code with Python

- Download VS Code: https://code.visualstudio.com/.

- Install the Python extension for VS Code from the Extensions Marketplace.

- Ensure Python 3.10 or later is installed.
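A small preflight script can confirm the setup before any agent code is written. The sketch below checks the Python version and reports which credentials are missing. The environment variable names (`OPENAI_API_KEY`, `JIRA_EMAIL`, `JIRA_API_TOKEN`, `JIRA_BASE_URL`) are assumptions for illustration, not names taken from the original script.

```python
import os
import sys

# Hypothetical variable names; use whatever names your own script reads.
REQUIRED_VARS = ("OPENAI_API_KEY", "JIRA_EMAIL", "JIRA_API_TOKEN", "JIRA_BASE_URL")


def python_is_supported(version_info=sys.version_info, minimum=(3, 10)):
    """Return True when the interpreter is at least the minimum version."""
    return tuple(version_info[:2]) >= minimum


def missing_variables(environ=os.environ, required=REQUIRED_VARS):
    """Return the required variables that are unset or empty."""
    return [name for name in required if not environ.get(name)]


if __name__ == "__main__":
    if not python_is_supported():
        print("Python 3.10 or later is required.")
    for name in missing_variables():
        print(f"Missing environment variable: {name}")
```

Keeping the keys in environment variables, rather than in the source file, means the script can be shared without exposing credentials.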

Problem Statement

In many enterprises, user stories are often written with vague language, missing details, or ambiguous acceptance criteria.

While these stories may pass initial review, their lack of clarity frequently leads to:

- Misinterpretation by developers and testers

- Misaligned expectations between business and technical teams

- Increased rework and costly delays in delivery

Poorly defined user stories can result in:

- Features that do not meet business needs

- Gaps in test coverage due to unclear acceptance criteria

- Unnecessary churn in sprint planning and backlog refinement

This project explores how even a simple AI-driven agent can help assess user story quality against objective measures, identify weaknesses early, and provide actionable feedback — reducing the risk of costly downstream errors.

Why This Matters

The quality of user stories directly impacts delivery timelines, development costs, and product quality.

A single poorly written story can trigger a chain reaction of misunderstandings, rework, and missed deadlines — all of which increase project risk and cost.

By introducing automated story evaluation:

- Clarity issues can be caught before sprint commitment.

- Ambiguous acceptance criteria can be flagged for refinement.

- Consistency in story quality can be maintained across teams and geographies.

- Data-driven feedback can help product owners and business analysts improve over time.

Evaluation Criteria

For this experiment, I had the AI agent evaluate stories against the following key quality dimensions:

- Readable – Are the story and its acceptance criteria easy to understand?

- Testable – Can the acceptance criteria be verified through testing?

- Implementation Agnostic – Does the story describe what to achieve, not how to do it?

- Actionable When Statement – Are the criteria tied to clear conditions for action?

- Strong Verb Usage – Are specific, active verbs used instead of vague terms like “should be”?

- Specific to the Story – Do the criteria directly relate to the story’s scope?

- Tell a Story – Do the acceptance criteria provide enough context to understand the user’s journey?

Each dimension was scored individually, and the total score provided a quick measure of overall quality, along with targeted improvement suggestions.
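One way to turn these dimensions into an evaluation request is to keep them as data and generate the prompt from that list. The sketch below is illustrative only: the prompt wording, the system message, and the function names are assumptions, and the original script may phrase things differently. The payload follows the shape of OpenAI's Chat Completions request body (`model` plus a list of `messages`).

```python
import json

# The seven quality dimensions listed above, each scored out of 10.
DIMENSIONS = [
    "Readable",
    "Testable",
    "Implementation Agnostic",
    "Actionable When Statement",
    "Strong Verb Usage",
    "Specific to the Story",
    "Tell a Story",
]
MAX_PER_DIMENSION = 10


def build_prompt(story_text):
    """Build the evaluation instructions sent to the model (hypothetical wording)."""
    lines = [f"- {name}: score out of {MAX_PER_DIMENSION}" for name in DIMENSIONS]
    overall_max = len(DIMENSIONS) * MAX_PER_DIMENSION
    return (
        "Evaluate the following user story and acceptance criteria.\n"
        "Score each dimension and explain the score in one sentence:\n"
        + "\n".join(lines)
        + f"\nThen give an overall score out of {overall_max} "
        + "and a list of suggestions for improvement.\n\n"
        + f"Story:\n{story_text}"
    )


def build_chat_payload(story_text, model="gpt-4"):
    """Return a JSON-serializable body for a chat completion request."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You review agile user stories."},
            {"role": "user", "content": build_prompt(story_text)},
        ],
    }


payload = build_chat_payload("As a user, I want a login page so that I can log in.")
print(json.dumps(payload, indent=2))
```

Because the dimensions live in one list, adding or renaming a dimension changes both the prompt and the maximum overall score in one place.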

Solution Approach

To address the problem of substandard user stories, I built a minimal AI-driven agent capable of:

- Reading a Jira story and its acceptance criteria.

- Evaluating the story against predefined quality dimensions.

- Suggesting improvements based on the evaluation.

Key Design Principles

- Simplicity First – The agent is CLI-based, keeping the UI and dependencies minimal.

- Speed of Implementation – The entire build and documentation process was limited to 4–5 hours.

- API-Driven – The solution relies on Jira’s REST API for story retrieval and updates, and OpenAI’s API for evaluation logic.

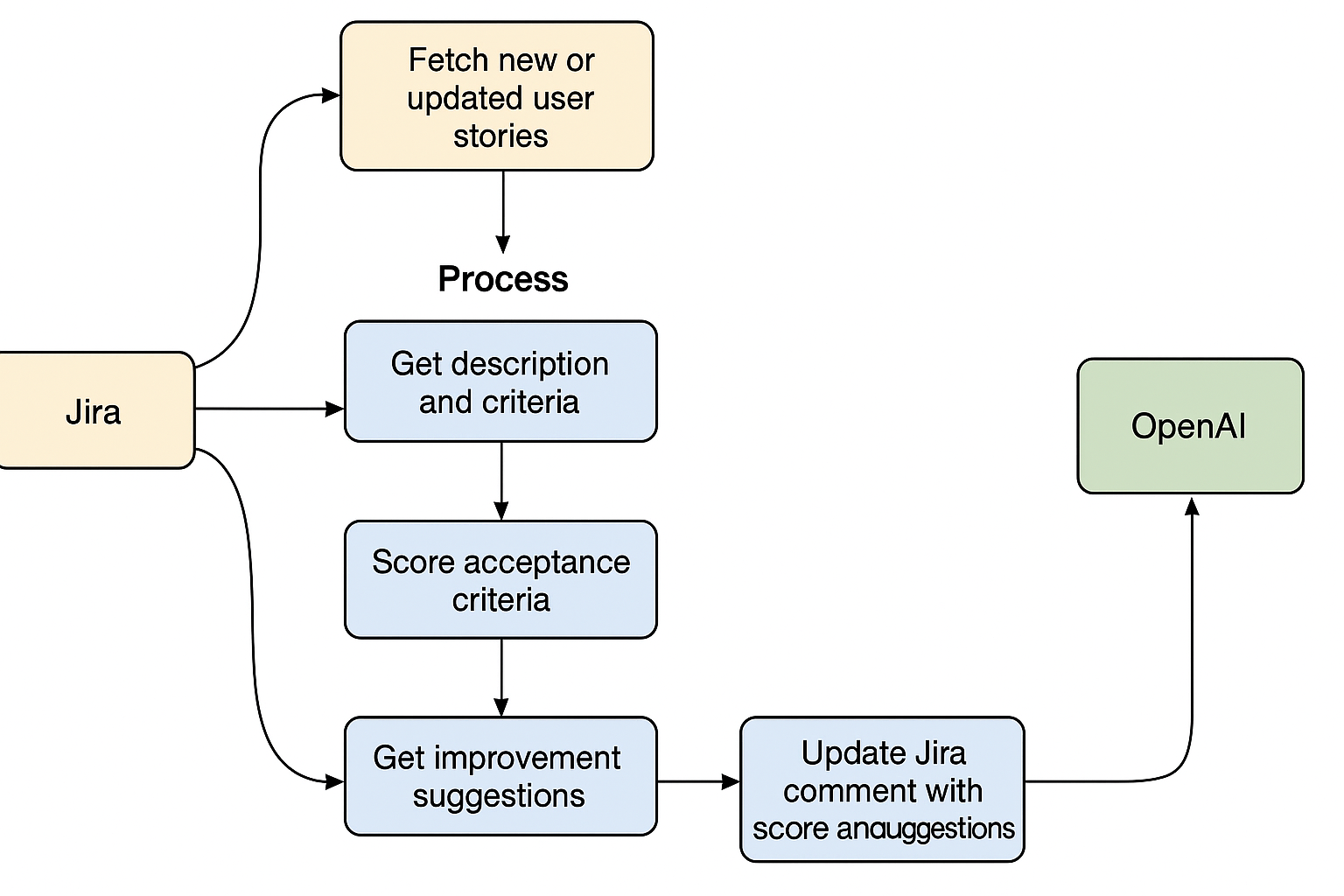

High-Level Workflow

- Retrieve Story Data – Connect to Jira Cloud and read the story’s summary and acceptance criteria.

- AI Evaluation – Send the text to the OpenAI API for scoring against the seven quality dimensions.

- Improvement Suggestions – Generate actionable feedback for each dimension.

- Optional Update – Push updated acceptance criteria or comments back into Jira.
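The first step of the workflow, retrieving a story, can be sketched with the standard library alone. This assumes Jira Cloud's v3 REST endpoint for a single issue (`/rest/api/3/issue/{issueKey}`) and HTTP Basic authentication built from the account email and API token. The function names and the placeholder site URL are hypothetical, and the original script may use a different HTTP client.

```python
import base64
import json
import urllib.request


def basic_auth_header(email, api_token):
    """Build an HTTP Basic auth header value from the email and API token."""
    raw = f"{email}:{api_token}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def build_issue_request(base_url, issue_key, email, api_token):
    """Build (but do not send) a GET request for one issue."""
    url = f"{base_url.rstrip('/')}/rest/api/3/issue/{issue_key}"
    return urllib.request.Request(
        url,
        headers={
            "Authorization": basic_auth_header(email, api_token),
            "Accept": "application/json",
        },
        method="GET",
    )


def fetch_issue(request, timeout=30):
    """Send the request and return the decoded JSON issue."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


# Placeholder values; the site URL and credentials are hypothetical.
request = build_issue_request(
    "https://example.atlassian.net", "SCRUM-1", "me@example.com", "token"
)
print(request.full_url)
```

Separating request construction from sending makes the URL and headers easy to inspect without touching the network; calling `fetch_issue(request)` with real credentials performs the actual call.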

Implementation

This Python script is the fourth iteration of the project. I built the functionality step by step, adding each piece incrementally.

The script:

- Loads environment variables for Jira and OpenAI API credentials securely.

- Fetches a Jira story by its issue key using Jira’s REST API.

- Extracts the acceptance criteria description from Jira’s rich text format.

- Sends this description to OpenAI’s GPT-4 API to score it on key quality patterns and provide improvement feedback.

- Adds the AI-generated score and feedback as a comment on the Jira issue.

- Includes error handling and user-friendly command-line usage.

Each iteration introduced new capabilities, making the script more functional and reliable.
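Two of the steps above involve Jira's rich text format, the Atlassian Document Format (ADF), which represents text as a nested JSON tree. The sketch below shows one possible way to flatten an ADF description into plain text and to wrap the AI feedback as an ADF comment body. It assumes the acceptance criteria live in the issue's description field, and the helper names are hypothetical rather than taken from the original script.

```python
# Node types whose children are placed on separate lines.
BLOCK_CONTAINERS = ("doc", "bulletList", "orderedList", "listItem")


def adf_to_text(node):
    """Recursively collect the text nodes of an ADF tree."""
    if not isinstance(node, dict):
        return ""
    if node.get("type") == "text":
        return node.get("text", "")
    parts = [adf_to_text(child) for child in node.get("content", [])]
    # Inline children (inside a paragraph) are joined side by side.
    separator = "\n" if node.get("type") in BLOCK_CONTAINERS else ""
    return separator.join(part for part in parts if part)


def text_to_adf_comment(text):
    """Wrap plain text as an ADF document, one paragraph per non-empty line."""
    paragraphs = [
        {"type": "paragraph", "content": [{"type": "text", "text": line}]}
        for line in text.splitlines()
        if line.strip()
    ]
    return {"body": {"type": "doc", "version": 1, "content": paragraphs}}


# A hand-written sample tree, standing in for issue["fields"]["description"].
sample_description = {
    "type": "doc",
    "version": 1,
    "content": [
        {"type": "paragraph", "content": [{"type": "text", "text": "User can log in."}]},
        {"type": "paragraph", "content": [{"type": "text", "text": "It should look nice."}]},
    ],
}
print(adf_to_text(sample_description))
```

The dictionary returned by `text_to_adf_comment` is the kind of JSON body that would be posted to the issue's comment endpoint.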

Enhancements

Possible enhancements:

- Automated Continuous Evaluation: Integrate the agent into the development workflow to automatically evaluate and provide feedback on user stories whenever they are created or updated, ensuring ongoing quality checks without manual triggers.

- Customizable Evaluation Criteria: Allow teams to define or adjust the acceptance criteria patterns and scoring parameters based on their specific processes or domain needs, making the agent adaptable across different projects or organizations.

- Advanced Reporting and Insights: Develop a dashboard or reporting system that aggregates evaluation results over time, highlighting trends, common issues, and areas for improvement to help teams enhance their agile practices strategically.

Sample Results

Let’s assume you have the story below.

Description: As a user, I want a login page so that I can log in.

Acceptance Criteria: User can log in. Wrong password doesn’t work. It should look nice.

Feedback from Woz

Evaluation:

- Readable: 9/10 - The acceptance criteria are clear and easy to understand.

- Testable: 8/10 - Most of the criteria are testable, but it could be more specific about what constitutes “easy to navigate” or “an error message”.

- Implementation Agnostic: 10/10 - The criteria do not dictate how the functionality should be implemented.

- Actionable When Statement: 6/10 - The criteria could be more specific about when certain actions should occur.

- Strong Verb Usage: 7/10 - The criteria use verbs like “should be” and “should accept”, but could use stronger, more specific verbs.

- Specific to the Story: 8/10 - The criteria are specific to the user story, but could be more detailed.

- Tell a Story: 7/10 - The criteria tell a story, but it could be more engaging and detailed.

Overall Score: 55/70

Suggestions for Improvement:

- Be more specific about what constitutes “easy to navigate”. For example, “The login page should have clearly labeled fields for username and password.”

- Specify what the error message should say when incorrect credentials are entered.

- Include a criterion about what should happen when the ‘forgot password’ link is clicked.

- Use stronger verbs. For example, instead of “should be”, use “must be”.

- Make the story more engaging by adding more detail. For example, “As a user who has forgotten their password, I want to be able to click on a ‘forgot password’ link and be guided through the process of resetting my password.”
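Since the model reports the overall score itself, it is worth checking that the number matches the per-dimension scores. The sketch below parses lines of the form `Name: N/10` from the feedback text and sums them. The regular expression and function names are illustrative assumptions, and the sample text is abbreviated from the evaluation above.

```python
import re

# Matches lines such as "- Readable: 9/10 - ..." or "Overall Score: 55/70".
SCORE_LINE = re.compile(
    r"^[ \t]*-?[ \t]*(?P<name>[^:\n]+):[ \t]*(?P<score>\d+)/(?P<max>\d+)",
    re.MULTILINE,
)

FEEDBACK = """\
Evaluation:
- Readable: 9/10 - The acceptance criteria are clear and easy to understand.
- Testable: 8/10 - Most of the criteria are testable.
- Implementation Agnostic: 10/10 - The criteria do not dictate implementation.
- Actionable When Statement: 6/10 - The criteria could be more specific.
- Strong Verb Usage: 7/10 - Could use stronger, more specific verbs.
- Specific to the Story: 8/10 - Could be more detailed.
- Tell a Story: 7/10 - Could be more engaging and detailed.
Overall Score: 55/70
"""


def parse_scores(feedback):
    """Return {dimension: (score, maximum)}, skipping the overall line."""
    return {
        match.group("name").strip(): (int(match.group("score")), int(match.group("max")))
        for match in SCORE_LINE.finditer(feedback)
        if match.group("name").strip() != "Overall Score"
    }


def total(scores):
    """Sum the earned and possible points across all dimensions."""
    earned = sum(score for score, _ in scores.values())
    possible = sum(maximum for _, maximum in scores.values())
    return earned, possible


print(total(parse_scores(FEEDBACK)))  # (55, 70), matching the reported overall score
```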